
I work in computer vision with my PhD students. Listed below are our current research interests, areas that we wish to expand into, and past projects that we are happy to reopen. If you are interested in working with me in any of these areas, for example as a PhD student, please contact me by email.

More information on each project, including downloadable publications, can be obtained by clicking the links below.


The Headspace dataset: a dataset of 1519 3D images of the human head. The collection was planned and implemented by the Alder Hey craniofacial unit, under the direction of Christian Duncan (3D capture shown on the left). At York, we have organised and annotated the dataset to make it searchable, both in terms of subjects and image qualities, and we are distributing it for research purposes. Several associated pieces of work are attached to this page, such as Laplacian ICP (IEEE Face and Gesture, 2023), the MICA project (FLAME model, ECCV 2022), and the REALY benchmark (ECCV 2022).


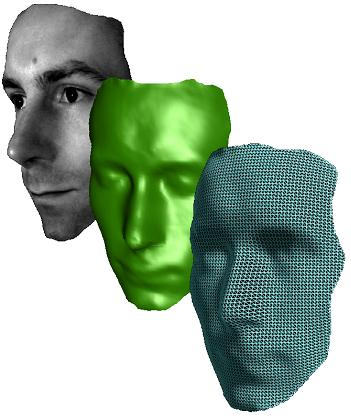

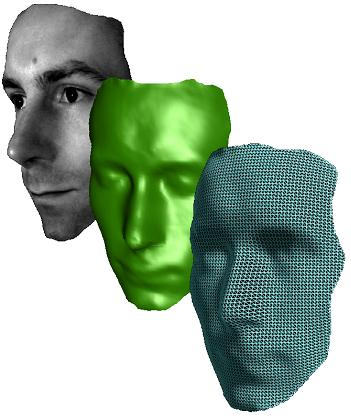

The Liverpool-York Head Model (LYHM): a 3D Morphable Model (3DMM) of the human head. We have developed techniques in 3D computer vision and machine learning to build statistical 3D shape models of the full human head, including the face and full cranium. Hang Dai's PhD developed shape morphing techniques and applied them to the face as well as to the more difficult cranial area, which is devoid of features. In collaboration with Alder Hey Children's Hospital, we aim to place our models at the centre of a software toolset that helps clinicians plan and assess craniofacial interventions.
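To give a flavour of what a statistical shape model does, the sketch below generates a shape instance as a mean shape plus a weighted sum of modes of variation. This is the generic linear (PCA-style) 3DMM formulation, which we assume here purely for illustration; it is not a description of how the LYHM itself is built. The function name `synthesise_shape` and the toy numbers are hypothetical.

```python
from math import sqrt
from typing import List, Sequence


def synthesise_shape(
    mean: Sequence[float],
    modes: Sequence[Sequence[float]],
    variances: Sequence[float],
    params: Sequence[float],
) -> List[float]:
    """Return mean + sum_i params[i] * sqrt(variances[i]) * modes[i].

    Shapes are flattened vertex lists [x0, y0, z0, x1, y1, z1, ...].
    Each parameter is expressed in standard deviations along its mode.
    """
    shape = list(mean)
    for mode, variance, b in zip(modes, variances, params):
        scale = b * sqrt(variance)
        for k, component in enumerate(mode):
            shape[k] += scale * component
    return shape


# Hypothetical toy model: two vertices (six coordinates) and two modes.
TOY_MEAN = [0.0, 0.0, 0.0, 1.0, 0.0, 0.0]
TOY_MODES = [
    [1.0, 0.0, 0.0, 0.0, 0.0, 0.0],  # moves vertex 0 along x
    [0.0, 0.0, 0.0, 0.0, 1.0, 0.0],  # moves vertex 1 along y
]
TOY_VARIANCES = [4.0, 1.0]

if __name__ == "__main__":
    # All-zero parameters reproduce the mean shape.
    print(synthesise_shape(TOY_MEAN, TOY_MODES, TOY_VARIANCES, [0.0, 0.0]))
    # One standard deviation along mode 0, minus two along mode 1.
    print(synthesise_shape(TOY_MEAN, TOY_MODES, TOY_VARIANCES, [1.0, -2.0]))
```

In a real model the mean, modes and variances would be learned from a set of registered 3D scans rather than written by hand.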


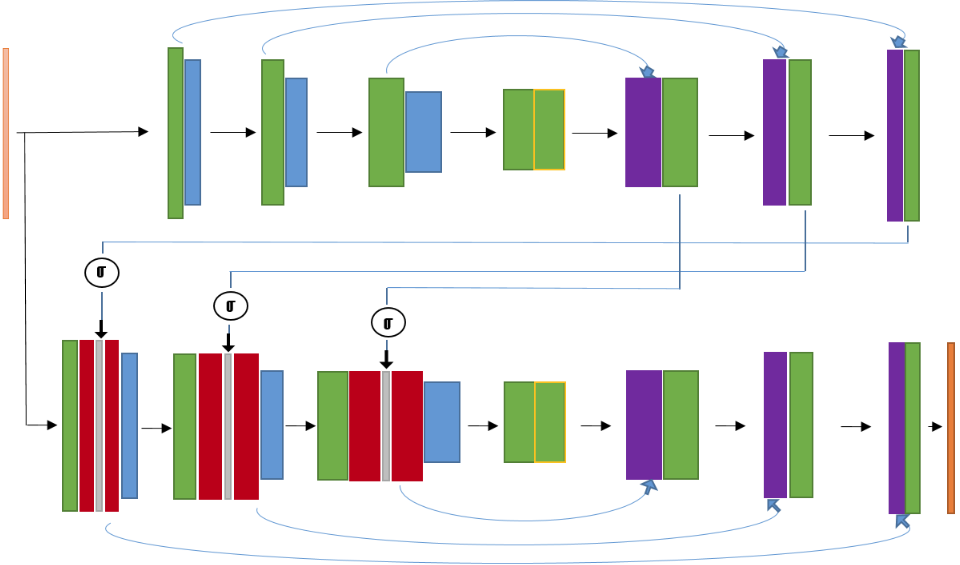

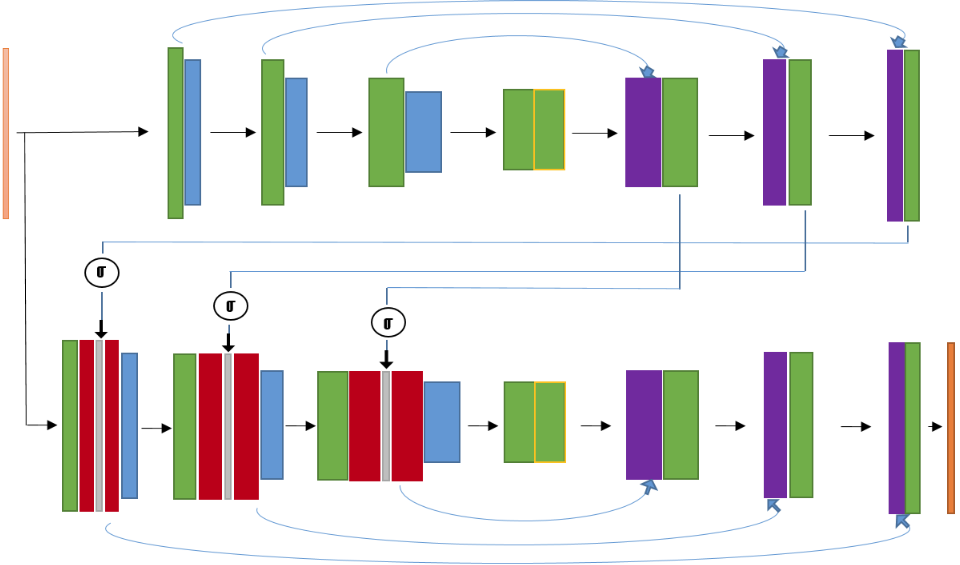

FocusNet: an attention-based fully-convolutional network for medical image segmentation. The PhD work of Chaitanya Kaul developed a novel technique to incorporate attention within convolutional neural networks using feature maps generated by a separate convolutional autoencoder. The attention architecture is well suited for incorporation with deep convolutional networks. Our model is evaluated on benchmark datasets for skin cancer segmentation and lung lesion segmentation. Results show highly competitive performance when compared with U-Net and its residual variant.


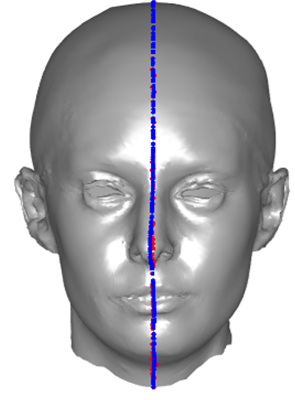

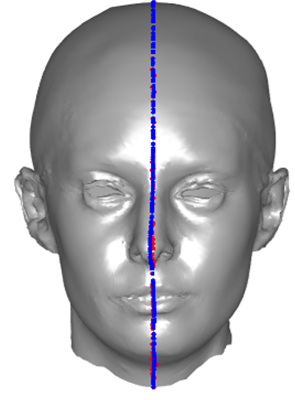

Symmetric shape morphing and symmetry-factored shape modelling. In Hang Dai's PhD, we applied both symmetric morphing and symmetry-factored modelling to our 3D Morphable Modelling pipeline and demonstrated their effectiveness in terms of the performance of 3D Morphable Models (3DMMs) of the human head.
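As a rough illustration of what factoring symmetry out of a shape can mean, the sketch below splits a shape into a symmetric part and an asymmetric residual by averaging it with its mirror image. It assumes a mesh whose plane of symmetry is x = 0 and a known list pairing each vertex with its mirror partner; both are assumptions made for this example, and the names `reflect` and `factor_symmetry` are hypothetical rather than taken from the thesis.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def reflect(shape: Sequence[Point], mirror_index: Sequence[int]) -> List[Point]:
    """Mirror a shape in the plane x = 0, keeping the vertex ordering.

    mirror_index[i] is the index of the vertex on the opposite side that
    corresponds to vertex i (a mid-line vertex maps to itself).
    """
    return [(-shape[j][0], shape[j][1], shape[j][2]) for j in mirror_index]


def factor_symmetry(
    shape: Sequence[Point], mirror_index: Sequence[int]
) -> Tuple[List[Point], List[Point]]:
    """Split a shape into (symmetric part, asymmetric residual).

    symmetric = (shape + reflected) / 2, asymmetric = (shape - reflected) / 2,
    so the two parts sum back to the original shape.
    """
    reflected = reflect(shape, mirror_index)
    symmetric = [
        ((p[0] + q[0]) / 2, (p[1] + q[1]) / 2, (p[2] + q[2]) / 2)
        for p, q in zip(shape, reflected)
    ]
    asymmetric = [
        ((p[0] - q[0]) / 2, (p[1] - q[1]) / 2, (p[2] - q[2]) / 2)
        for p, q in zip(shape, reflected)
    ]
    return symmetric, asymmetric


if __name__ == "__main__":
    # Two paired vertices; the second sits 0.5 higher than its partner.
    toy_shape = [(1.0, 0.0, 0.0), (-1.0, 0.5, 0.0)]
    toy_mirror = [1, 0]
    print(factor_symmetry(toy_shape, toy_mirror))
```

The symmetric part is unchanged by reflection, so separate statistical models could then be built for the symmetric and asymmetric components.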


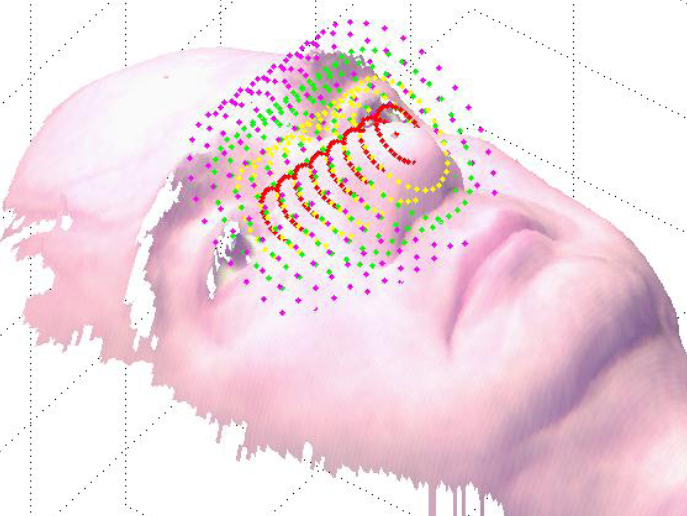

The York Ear Model (YEM): a 3D Morphable Model of the human ear, with an associated 3D ear dataset. Hang Dai's PhD developed a 3D Morphable Model (3DMM) of the human ear, initially using a small set of 20 3D ear images and then augmenting the model with a larger set of 2D ear images, mapped to 3D, so that 3D ear shape is captured over a much larger population (520).


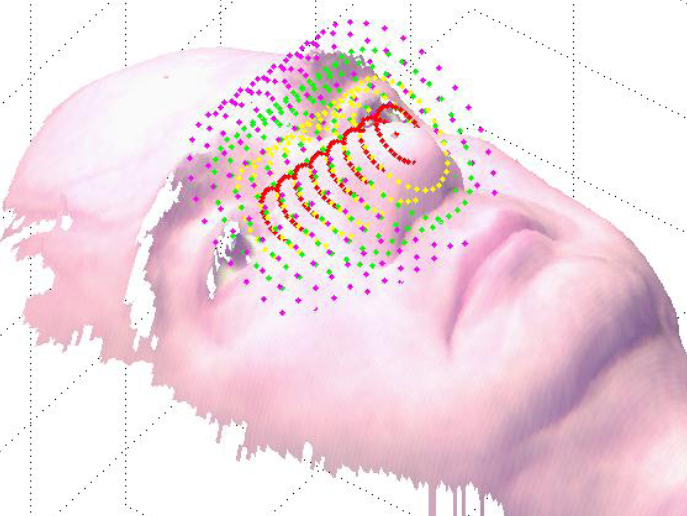

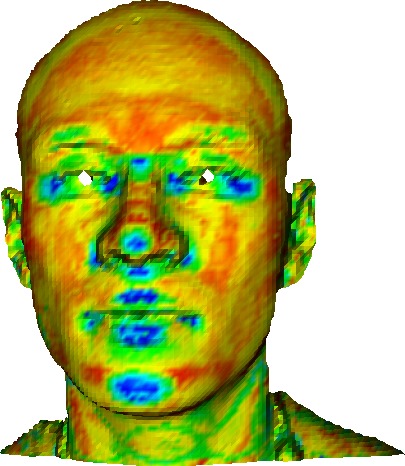

Keypoint Extraction and Landmarking of 3D Face Meshes. We use machine learning techniques to learn the properties that best distinguish facial landmarks from their surrounding (non-neighbourhood) vertices. Key techniques in Clement Creusot's PhD include learning with Linear Discriminant Analysis and AdaBoost to determine how to combine local descriptors. To extract the global structure of landmark positions, both model fitting and hypergraph matching techniques were investigated. The work was extended in Stephen Clement's PhD, where the model was flexed and different scoring functions and sample consensus procedures were used.
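To illustrate how Linear Discriminant Analysis can learn a way of combining local descriptors, the sketch below computes two-class Fisher LDA weights for vertices described by two descriptor values each. It is a minimal textbook version under simplifying assumptions (two descriptors, two classes), not the pipeline used in the theses; the function name and the toy descriptor values are hypothetical.

```python
from typing import Sequence, Tuple

Descriptor = Tuple[float, float]


def fisher_lda_weights(
    landmark: Sequence[Descriptor], background: Sequence[Descriptor]
) -> Tuple[float, float]:
    """Two-class Fisher LDA in 2-D: w = Sw^-1 (mean_landmark - mean_background).

    Sw is the within-class scatter matrix summed over both classes.
    """

    def mean(rows: Sequence[Descriptor]) -> Descriptor:
        n = len(rows)
        return (sum(r[0] for r in rows) / n, sum(r[1] for r in rows) / n)

    def scatter(rows: Sequence[Descriptor], m: Descriptor) -> Tuple[float, float, float]:
        sxx = sum((r[0] - m[0]) ** 2 for r in rows)
        sxy = sum((r[0] - m[0]) * (r[1] - m[1]) for r in rows)
        syy = sum((r[1] - m[1]) ** 2 for r in rows)
        return sxx, sxy, syy

    m1, m0 = mean(landmark), mean(background)
    a1, b1, c1 = scatter(landmark, m1)
    a0, b0, c0 = scatter(background, m0)
    sxx, sxy, syy = a1 + a0, b1 + b0, c1 + c0

    det = sxx * syy - sxy * sxy
    if abs(det) < 1e-12:
        raise ValueError("within-class scatter matrix is singular")

    dx, dy = m1[0] - m0[0], m1[1] - m0[1]
    # Closed-form inverse of the symmetric 2x2 matrix applied to (dx, dy).
    return ((syy * dx - sxy * dy) / det, (sxx * dy - sxy * dx) / det)


if __name__ == "__main__":
    # Hypothetical descriptors: only the first one separates the classes.
    landmark_vertices = [(4.0, 1.0), (5.0, 1.0), (4.0, 2.0), (5.0, 2.0)]
    other_vertices = [(0.0, 1.0), (1.0, 1.0), (0.0, 2.0), (1.0, 2.0)]
    print(fisher_lda_weights(landmark_vertices, other_vertices))
```

In this toy example the second descriptor receives zero weight because it does not help to separate landmark vertices from the rest.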


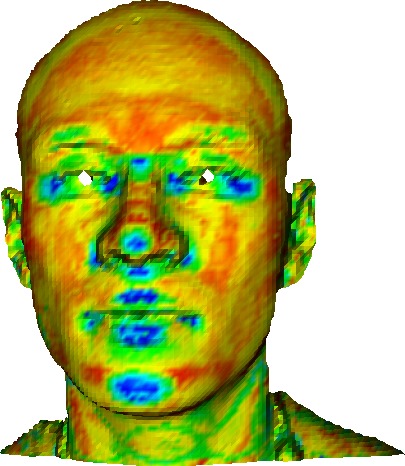

3D Local Shape Descriptors. We have designed several new pose-invariant yet discriminating 3D surface descriptors and used these in applications of 3D facial landmarking (Marcelo Romero's PhD) and 3D pose normalisation (Tom Heseltine's PhD).


3D SLAM for mobile robots. Recently, we have worked on Simultaneous Localisation and Mapping for mobile robots using the Kinect 3D camera (Hao Sun's PhD). The work calibrated and characterised the performance of the Kinect and then extracted scene planes from 3D scans using both RANSAC and the Hough transform.
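For readers unfamiliar with RANSAC plane extraction, a minimal sketch follows. It repeatedly fits a plane to three randomly chosen points and keeps the plane supported by the most inliers. It illustrates the general technique only, not the implementation from the thesis; the function names, the distance threshold and the iteration count are hypothetical choices.

```python
import random
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float, float]
Plane = Tuple[float, float, float, float]  # (a, b, c, d) with ax + by + cz + d = 0


def plane_from_points(p: Point, q: Point, r: Point) -> Optional[Plane]:
    """Plane through three points with a unit normal, or None if they are collinear."""
    ux, uy, uz = q[0] - p[0], q[1] - p[1], q[2] - p[2]
    vx, vy, vz = r[0] - p[0], r[1] - p[1], r[2] - p[2]
    nx, ny, nz = uy * vz - uz * vy, uz * vx - ux * vz, ux * vy - uy * vx
    norm = (nx * nx + ny * ny + nz * nz) ** 0.5
    if norm < 1e-12:
        return None
    nx, ny, nz = nx / norm, ny / norm, nz / norm
    return (nx, ny, nz, -(nx * p[0] + ny * p[1] + nz * p[2]))


def ransac_plane(
    points: Sequence[Point],
    threshold: float = 0.01,
    iterations: int = 200,
    seed: int = 0,
) -> Tuple[Optional[Plane], List[int]]:
    """Return the best plane found and the indices of its inlier points."""
    rng = random.Random(seed)
    candidates = list(points)
    best_plane: Optional[Plane] = None
    best_inliers: List[int] = []
    for _ in range(iterations):
        plane = plane_from_points(*rng.sample(candidates, 3))
        if plane is None:
            continue  # degenerate (collinear) sample
        a, b, c, d = plane
        inliers = [
            i
            for i, (x, y, z) in enumerate(candidates)
            if abs(a * x + b * y + c * z + d) <= threshold
        ]
        if len(inliers) > len(best_inliers):
            best_plane, best_inliers = plane, inliers
    return best_plane, best_inliers


if __name__ == "__main__":
    # Synthetic scan: a 5 x 5 grid on the plane z = 1 plus three outliers.
    scan = [(float(x), float(y), 1.0) for x in range(5) for y in range(5)]
    scan += [(0.0, 0.0, 5.0), (1.0, 2.0, -3.0), (3.0, 1.0, 9.0)]
    plane, inliers = ransac_plane(scan)
    print(plane, len(inliers))
```

To extract several scene planes, one option is to remove the inliers of the best plane and run the procedure again on the remaining points.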


3D Face Recognition. We have employed specialised 3D cameras to collect 3D face data. Previous work in Tom Heseltine's PhD resulted in the development of a successful face recognition system for near-frontal views of cooperating subjects. Part of that work collected the UoY 3D Face Dataset. Our current focus is to develop novel, robust techniques to recognise faces at a distance, with non-cooperating subjects.


Vision-based HCI. We have worked on the interaction between mobiles (e.g. smartphones) and intelligent displays. We wish to build on our novel concept of registered displays, where a touch-screen click on a mobile is projected onto a distant display; this is now known as 'touch projection'. This allows you to interact on the mobile as if you were interacting directly on the distant display itself. The core technology that allows this to work is image registration, where a copy of the mobile's captured image of the distant display is transmitted to that display's PC via a wireless link (Bluetooth or Wi-Fi) for matching.
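As an illustration of the final step of touch projection, the sketch below maps a touch position in the mobile's captured image to a position on the distant display. It assumes that image registration has already produced a 3x3 planar homography between the two; that assumption, the function name `project_touch` and the example matrix are hypothetical and not a description of our system.

```python
from typing import Sequence, Tuple

Homography = Sequence[Sequence[float]]  # 3x3 matrix, row-major


def project_touch(h: Homography, x: float, y: float) -> Tuple[float, float]:
    """Map a touch at (x, y) in the mobile image to display coordinates.

    The point is treated as the homogeneous vector (x, y, 1), multiplied
    by the homography, and divided by the resulting third component.
    """
    u = h[0][0] * x + h[0][1] * y + h[0][2]
    v = h[1][0] * x + h[1][1] * y + h[1][2]
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    if abs(w) < 1e-12:
        raise ValueError("point maps to infinity under this homography")
    return (u / w, v / w)


if __name__ == "__main__":
    # Hypothetical registration result: scale by 2, then shift by (100, 50).
    example_h = [
        [2.0, 0.0, 100.0],
        [0.0, 2.0, 50.0],
        [0.0, 0.0, 1.0],
    ]
    print(project_touch(example_h, 10.0, 20.0))
```

The display's PC could then treat the returned coordinates as an ordinary click at that position.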


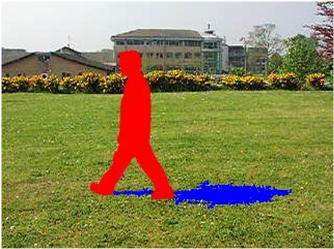

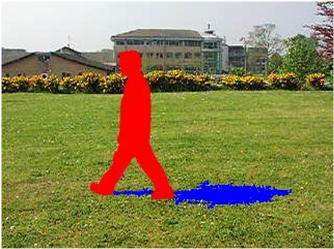

Vehicle Segmentation and Recognition. We have developed techniques to automatically recognise the make and model of cars, lorries, and vans from standard (2D) video. This is an extension of our TSB-funded CLASSAC project.


Human Action Recognition. We have developed techniques to automatically recognise human actions from standard (2D) video. This is an extension of our TSB-funded VIDEOWARE project and also uses segmentation work developed in the TSB-funded CLASSAC project.

There are several research areas that we wish to apply our expertise in. Some of these are listed below and you may wish to investigate the possibility of doing a PhD in one of these areas.

Past projects that are still of interest and relevance are listed below in reverse chronological order. Many of these have been funded by TSB, DTI or EPSRC. Although these projects are not currently active, we welcome PhD applicants who wish to build on our experience in these areas.