Latest News

Updated regularly with the latest developments in my research and activities.

-

17 Feb 2026
Accepted Paper: Formally Guaranteed Control Adaptation for ODD-Resilient Autonomous Systems

Ensuring reliable performance in situations outside the Operational Design Domain (ODD) remains a primary challenge in devising resilient autonomous systems. We explore this challenge by introducing an approach for adapting probabilistic system models to handle out-of-ODD scenarios while also providing quantitative guarantees. Our approach dynamically extends the coverage of the system's existing situation-handling capabilities, supporting the verification and adaptation of the system's behaviour in unanticipated situations at runtime. Preliminary results demonstrate that our approach effectively increases system reliability by adapting the system's behaviour and providing formal quantitative guarantees, even in unforeseen out-of-ODD situations.

Click here to read about the paper ...

-

24 Oct 2025
New Pre-print: Out-of-Distribution Detection for Safety Assurance of AI and Autonomous Systems

Rigorously demonstrating the safety of autonomous systems is critical for their responsible adoption, but it is challenging because it requires robust methodologies that can handle novel and uncertain situations throughout the system lifecycle, including detecting out-of-distribution (OOD) data. OOD detection is therefore receiving increased attention from the research, development and safety engineering communities. This comprehensive review analyses OOD detection techniques in the context of safety assurance for autonomous systems, particularly in safety-critical domains.

Click here to access the paper ...

-

15 Sep 2025
New Paper: Cautious Optimism: Public Voices on Medical AI and Sociotechnical Harm

Medical-purpose software and Artificial Intelligence ('AI')-enabled technologies ('medical AI') raise important social, ethical, cultural, and regulatory challenges. To elucidate these challenges, we present the findings of a qualitative study undertaken to elicit public perspectives and expectations around medical AI adoption and related sociotechnical harm. Sociotechnical harm refers to any adverse implications, including but not limited to physical, psychological, social, and cultural impacts, experienced by a person or broader society as a result of medical AI adoption. The work is intended to guide effective policy interventions to address, prioritise, and mitigate such harm.

Click here to access the paper ...

-

15 Sep 2025
New Paper: INSYTE: A Classification Framework for Traditional to Agentic AI Systems

INSYTE is our classification framework for traditional to agentic AI systems. It is designed to support cross-stakeholder communication, to inform design, development and deployment decisions, to facilitate safety engineering and assurance, to provide a classification system for regulatory and/or certification purposes, and to help inform decisions about liability.

Click here to access the paper ...

-

15 Aug 2025
New Paper: Agile Development for Safety Assurance of Machine Learning in Autonomous Systems (AgileAMLAS)

AgileAMLAS tightly integrates Agile software engineering, Agile systems thinking, and ML engineering with our proven safety engineering methodology, AMLAS. This novel approach extends AMLAS by weaving software and ML engineering artefacts and processes together with safety engineering artefacts and processes, creating a truly through-life approach. This through-life development and assurance lifecycle is a crucial missing piece in the current literature.

Our goal with AgileAMLAS is to provide practical guidance to designers, developers, and safety practitioners. AgileAMLAS provides clear, step-by-step guidelines for developing and deploying ML for autonomous systems using DevOps and MLOps principles, and for generating compelling safety cases using the established guidance from AMLAS.

Click here to access the paper ...

-

30 Jul 2025
Blog: Autonomous robots in the field: enhancing solar farm safety and efficiency

Our work is developing robust safety assurance mechanisms and thorough validation processes to argue the reliability of autonomous mobile robots (AMRs) in dynamic, real-world environments. Our robots will adhere to stringent safety standards and regulatory frameworks to ensure that risks are reduced as low as reasonably practicable (ALARP).

By developing a use case based on our own on-site solar farm, we aim to demonstrate that AMRs can be used safely to carry out accurate inspections, trigger timely cleaning and maintenance to improve energy efficiency, reduce fire risk, predict where structural repairs are needed, and extend the lifespan of panels.

Click here to find out more ...

-

17 Jul 2025
Accepted Paper: Robustness Requirement Coverage using a Situation Coverage Approach for Vision-based AI Systems

AI-based robots and vehicles are expected to operate safely in complex and dynamic environments, even in the presence of component degradation. In such systems, perception relies on sensors such as cameras to capture environmental data, which is then processed by AI models to support decision-making. However, degradation in sensor performance directly impacts input data quality and can impair AI inference. Specifying safety requirements for all possible sensor degradation scenarios leads to unmanageable complexity and inevitable gaps. In this position paper, we present a novel framework that integrates camera noise factor identification with situation coverage analysis to systematically elicit robustness-related safety requirements for AI-based perception systems. We focus specifically on camera degradation in the automotive domain.

Click here to access the paper ...

-

05 Jun 2025
Accepted Paper: SCALOFT: An Initial Approach for Situation Coverage-Based Safety Analysis of an Autonomous Aerial Drone in a Mine Environment

This paper presents a testing approach named SCALOFT for systematically assessing the safety of an autonomous aerial drone in a mine. SCALOFT provides a framework for developing diverse test cases, real-time monitoring of system behaviour, and detection of safety violations. Detected violations are then logged with unique identifiers for detailed analysis and future improvement. SCALOFT helps build a safety argument by monitoring situation coverage and calculating a final coverage measure.

Click here to access the paper ...

-

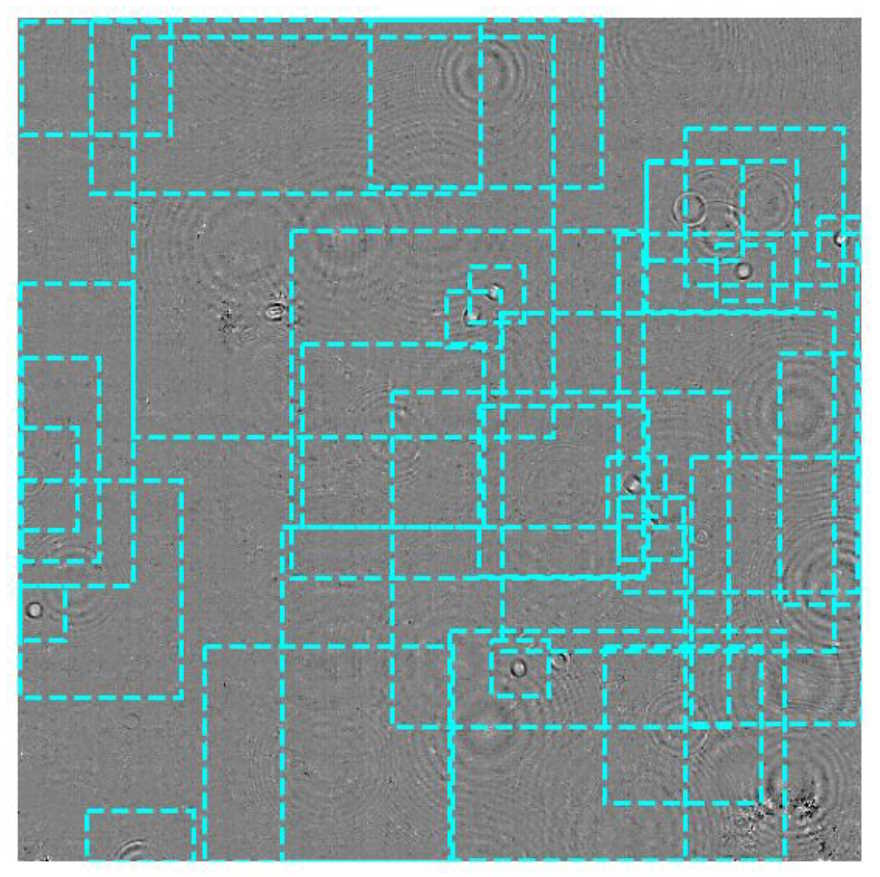

26 Apr 2024
New Article: Real-time 3D tracking of swimming microbes using digital holographic microscopy and deep learning

The three-dimensional swimming tracks of motile microorganisms can be used to identify their species, which holds promise for the rapid identification of bacterial pathogens. Digital holographic microscopy (DHM) is a well-established but computationally intensive method for obtaining three-dimensional cell tracks from video microscopy data. We accelerate the analysis by an order of magnitude, enabling its use in real time. This technique opens up the possibility of rapidly identifying bacterial pathogens in drinking water or clinical samples.

Click here to access article ...

-

25 Mar 2024
New Paper and GitHub Repo: Aloft: Self-Adaptive Drone Controller Testbed

Aerial drones are increasingly being considered a valuable tool for inspection in safety-critical contexts. Nowhere is this more true than in mining operations, which present a dynamic and dangerous environment for human operators. Drones can be deployed in a number of contexts, including efficient surveying and search-and-rescue missions. Operating in these dynamic contexts is challenging, however, and requires the drone's control software to detect and adapt to conditions at run-time.

In this paper, we describe a controller framework and simulation environment, and provide information on how users can construct and evaluate their own controllers in the presence of run-time disruptions.

A virtual machine containing the artifact can be found here: Aloft GitHub repo