Research Summary

Currently, I am a Senior Researcher (Research Fellow) in the Dept of Computer Science. I work on a number of projects in through-life safety assurance of AI for autonomous systems with particular focus on safe robotics platforms, and assuring AI and robots in uncertain environments. This builds on my last role as a senior software engineer developing software for assuring the safety of autonomous systems.

Previously, I was a Research Project Manager in the Dept of TFTI developing machine learning for sports data analytics and narrative generation.

Before that I was a Senior Researcher and software developer producing a data analytics platform to process sensor data from robots using leading-edge artificial intelligence algorithms. The software identified anomalies in streamed data to allow monitoring of infrastructure and environments, and search & rescue missions. A deep reinforcement learning algorithm used the sensor data to navigate the robot to the site of the anomaly using mapless navigation. I also researched the safety assurance of robot geo-location and navigation for Industry 4.0 applications such as reconfigurable factories.

I was also a Research Fellow and software developer for digital games. I co-developed a data analytics platform for esports data, including a tool for win prediction in esports matches. I worked on the NEMOG Project, where I focused on data mining for collective game intelligence and business models for digital games. I investigated issues around gathering data and developed new algorithms to mine the data.

Initially I was a researcher on a number of projects including the NEWTON Project to develop an autonomous, intelligent system for structural health and condition monitoring of railways using anomaly detection. The Carmen/YouShare projects developed a web-based portal for users to run compute-intensive research. I also worked on the Freeflow project to develop software systems for monitoring road traffic patterns. These systems used real-time data analytics to provide intelligent decision support to traffic managers.

Interests

- Anomaly Detection

- Artificial Intelligence

- Machine Learning

- Neural Networks

- Robotics

- Safety Assurance

Research Projects

ISA Solar Farm Project

Autonomous Robots in the Field: Enhancing Solar Farm Safety and Efficiency

The robotics platform will integrate multiple robots to safely navigate, inspect and maintain a solar farm. This includes programming robots to sense their environment; understand their state and environment from what they sense; decide what to do according to their current state and goal; and perform the chosen action. This all has to be done safely even though the real world is ever-changing and the robot will have to understand and handle situations it has never encountered before.

Solar farms need to be maintained to ensure they operate efficiently. The project will develop:

- A safety-assured, AI-driven robotics platform for solar farm inspection and maintenance.

- A novel system/safety lifecycle for autonomous robots operating in uncertain environments and alongside humans.

- Methods to ensure harms & risks to humans are considered throughout the lifecycle.

- Safety assured systems, tools & processes from simulation → lab → real-world.

- New AI for detecting “unsafeness”.

- New evidence gathering methods.

- New approaches for ensuring safety guarantees are met at both the component and system levels.

ASUMI Project – Principal Investigator

The project assured the safety of UAVs for mine inspection.

Uncrewed aerial vehicles (UAVs) are ideal for inspecting infrastructure such as mines. However, the use of UAVs in real-world environments can cause harm to humans, damage the UAVs, and damage infrastructure. Hazards may be caused directly by the UAV if it collides with objects in the environment, or if the UAV fails to complete a mission and needs to be recovered. We need to provide assurance that their use will not cause harm.

- The three project partners developed, defined, and validated safety requirements and a safe operating concept for UAVs performing mine inspections. This is to ensure safe operation and guarantee early intervention where required.

ASPEN Project – Co–I

ASPEN developed and assured autonomous systems for forest inspection.

Forest protection is essential to mitigating climate change, and a major objective of the Forests and Climate Leaders’ Partnership established at COP27.

Autonomous Systems for Forest ProtEctioN (ASPEN) developed and trialled an integrated framework for the autonomous detection, diagnosis and treatment of tree pests and diseases. ASPEN was a multidisciplinary project between 4 UK universities, the UK Forestry Commission and industry partners, funded by the UKRI Trustworthy Autonomous Systems Hub.

- ASPEN aimed to advance the sociotechnical feasibility of managing tree health through improving the application of machine learning to remote sensing forest data, trialling autonomous sampling for diagnosis and treatment deployment, and crystallising the governance context of these systems.

DAISY Project

The DAISY project will develop a diagnostic AI System for robot-assisted A&E triage.

DAISY, which stands for Diagnostic Artificial Intelligence System, is a pilot prototype humanoid device, designed to assist with the initial clinical triage assessments routinely carried out when patients attend the emergency department. The aim is to explore whether DAISY’s advanced digital technology can enhance these processes.

- The system provides instructions to patients on how to use medical equipment to measure their own vital signs. DAISY will ask patients a series of health-related questions, gathering important data such as symptoms, body temperature, and pulse rate. All this information is then analysed and compiled into a clinical report, which is intended to support staff in their assessment of the patient.

- Only patients who are willing, give consent and are able to use DAISY will be invited to try it.

Factories in the Cloud Project

The project analysed the safety assurance of UWB geofencing for Industry 4.0.

As manufacturing becomes increasingly automated and dynamic at every stage of operation, there is a move towards dynamic factories. These factories manufacture a broad range of products, frequently reconfiguring the factory layout to optimise production, efficiency and costs. A key facet of this development is Industry 4.0 digitization, often described as the next industrial (or data) revolution, which requires smart technologies to realize this flexibility and automation. As factories and warehouses embrace Industry 4.0, they are using robots in ever-increasing numbers, working in robot teams and in cooperation with humans at various stages of the production line. To ensure safety in Industry 4.0 settings, these robots need to be tracked and must be able to navigate dynamic environments autonomously and safely.

- We analysed the safety of using Ultra-Wideband (UWB) ranging technology and commercial off-the-shelf (COTS) tagging hardware to track the robots and to keep them out of protected (danger) zones by issuing an emergency stop command if they are likely to encroach. We used specialised mapping software to set up the danger zones and to geo-locate the robots. How reliable is this system?
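The encroachment check described above can be sketched as follows. This is a minimal illustration and not the project's implementation. It assumes rectangular danger zones, a 2-D position already estimated from the UWB ranges, a constant-velocity prediction, and a safety margin standing in for positioning error. All names and default values are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Zone:
    """Axis-aligned rectangular danger zone in metres (assumed shape)."""
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    def contains(self, x: float, y: float, margin: float = 0.0) -> bool:
        # Inflate the zone by the margin to allow for ranging error.
        return (self.x_min - margin <= x <= self.x_max + margin
                and self.y_min - margin <= y <= self.y_max + margin)


def should_emergency_stop(pos, vel, zones, horizon_s=1.0, margin_m=0.3, steps=10):
    """Return True if the robot is in, or predicted to enter, any danger zone.

    pos and vel are (x, y) tuples in metres and metres per second.
    The horizon, margin and step count are assumed values, not project settings.
    """
    for i in range(steps + 1):
        t = horizon_s * i / steps
        # Constant-velocity prediction of where the robot will be at time t.
        x = pos[0] + vel[0] * t
        y = pos[1] + vel[1] * t
        if any(zone.contains(x, y, margin_m) for zone in zones):
            return True
    return False
```

Sampling the predicted path, rather than testing only the current position, is what allows a stop to be issued before the robot reaches the zone boundary. The safety question raised above then becomes how large the margin and horizon must be given the measured ranging error and the robot's stopping distance.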

** Photo by Markus Burkle from Pexels

Reconfigurable Sensors for UAVs (Drones) Project

The project combined AI & robotics to develop a UAV (drone) with reconfigurable sensors for monitoring of infrastructure and environments, and search & rescue.

- The Adaptive and Autonomous Robotics Module (AARM) Project developed a mobile robotics module and monitoring software for UAVs (drones). AARM can analyse buildings, infrastructure and environments, and perform search & rescue.

The module is a set of hot-swappable sensor and processing plates that clip together, attach to the drone, communicate with each other and send their data for on-site analysis. The plates can be configured into a module either by a user or by a robotic arm. The hot-swappable plates can be reconfigured or faulty plates replaced as needed.

The plates stream their data for analysis by artificial intelligence (AI) software, which detects anomalies and guides the drone using a novel deep reinforcement learning algorithm for mapless robot navigation.

AARM has the potential to:

- work in conjunction with UAVs (drones),

- work in conjunction with UGVs (robots),

- work in swarms of robots and drones,

- incorporate telepresence to allow remote interactive operation, and

- generate real-time augmented reality (AR) displays of buildings, infrastructure and environments for human operators, overlaying sensor analyses, heatmaps and alerts onto video images from the drone in real time.

NEMOG Project

The NEMOG Project focused on data mining for collective game intelligence. NEMOG used game analytics to unlock the potential for scientific and social benefits in digital games.

So many games are sold, and so many hours are spent playing them, that persuading even a small fraction of the games industry to consider the potential for social and scientific benefit could achieve a massive benefit for society. It could also start a movement that leads to mainstream distribution of games aimed at scientific and social benefits.

NEMOG analysed the current state of the digital games industry, by engaging directly with games companies and with industry network associations. It conducted research into sustainable business models for digital games, and particularly for games with scientific and social goals. These showed how businesses can start up and grow to develop a new generation of games with the potential to improve society.

Every action in an online game, from an in-game purchase to a simple button push, generates a piece of network data. This is a truly immense source of information about player behaviours and preferences. We explored what online data is available now and might become available in the future, investigated the issues around gathering such data, and developed new data mining algorithms to better understand game players as an avenue for making better games, societal impact and scientific research.

The NEMOG Project was an inter-disciplinary collaboration between research teams from York, Durham and Northumbria Universities and CASS Business School at City University London in partnership with industrial collaborators.

** Image from: The Science in Video Games!

NEWTON Project

NEWTON aimed to develop an autonomous, wireless sensor network and intelligent system for condition monitoring and anomaly detection in structures and infrastructure.

NEWTON produced and evaluated a low-cost wireless sensor network and intelligent monitoring system for corrosion and stress. The system had specific applications in condition monitoring and anomaly detection of railways and in-service Non-Destructive Evaluation (NDE) for nuclear applications. It also provided rapid inspection of anomalies in large structures, new materials, ageing infrastructure, safety-critical systems for aircraft, power plants, oil/gas/water pipelines and offshore infrastructure.

The NEWTON Project work was undertaken jointly by a cross-disciplinary research team from Newcastle, Sheffield and York Universities, in collaboration with industrial partners.

NEWTON investigated:

- low cost and low power consumption sensor technologies,

- novel approaches of RFID–based passive sensing networks,

- spectrally efficient and reliable communications,

- autonomous data fusion and feature extraction,

- signal processing methods for nonlinear system identification and analysis,

- outlier and anomaly detection in wireless sensor data,

- cloud–based computing architectures and decision making,

- intelligent system management.

York NEWTON work:

- Hadoop architecture and support for networking. At the University of York, we developed a cloud-based software architecture for data storage, management and analysis using Apache Hadoop.

- Outlier and anomaly detection. At the University of York, we investigated finding outliers in wireless sensor data to allow anomaly detection and condition monitoring of infrastructure.
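To illustrate what finding outliers in sensor data can look like, here is a minimal sketch using the median absolute deviation (MAD). This is a generic textbook technique chosen for the example. It is not claimed to be the method used in NEWTON, and the function name and threshold are assumptions.

```python
import statistics


def mad_outliers(readings, threshold=3.5):
    """Return indices of readings whose modified z-score exceeds the threshold.

    The modified z-score is 0.6745 * |x - median| / MAD. The threshold of 3.5
    is a commonly quoted default and is an assumption here.
    """
    median = statistics.median(readings)
    deviations = [abs(r - median) for r in readings]
    mad = statistics.median(deviations)
    if mad == 0:
        # More than half the readings are identical, so there is no spread
        # to measure against. This sketch reports nothing in that case.
        return []
    return [i for i, d in enumerate(deviations) if 0.6745 * d / mad > threshold]
```

The median and MAD are used instead of the mean and standard deviation because a single faulty sensor reading barely shifts them, so the outlier does not mask itself.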

** Metal Rail Bridge image from: FreeDigitalPhotos.net

FREEFLOW Project

FREEFLOW developed data mining and decision support tools for traffic operators and individual travellers. It aimed to improve traffic management and operation by turning data into intelligence.

Traffic monitoring systems collect ever more detailed and timely data about transport networks. We integrated and mined these data using tools that detected patterns and anomalies in traffic data and used pattern matching to find similar historical patterns. We then took suitable measures to assist traffic network operators and to improve the current traffic flow, using both historical knowledge and traffic modelling.

The FREEFLOW Project involved 15 partners from academia, industry and government.

The FREEFLOW Project operated on three sites.

- In London, FREEFLOW analysed Park Lane and Hyde Park Corner, a key London route which is as busy as many UK motorways, and selected appropriate traffic signal settings and other measures to mitigate congestion.

- In Maidstone, the project focused on managing the urban / inter-urban interface and displaying sets of messages on variable message signs to improve travel times.

- Work in York focused on bus punctuality and selecting the most appropriate traffic signal plans and messages to display to maintain punctuality.

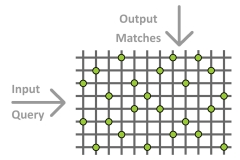

Data are stored and matched

At the University of York, we developed a k-nearest neighbour based pattern matching tool using neural networks. The tool was based on Correlation Matrix Memories (CMMs), which are binary associative neural networks. CMMs can store large amounts of data and allow fast searches. We converted traffic data variables (such as data from sensors embedded in the road or from buses) into vectors using a quantisation process. These vectors were then stored in a historical database of vectors in the CMM.

As new traffic data were generated, we converted them into a query vector using the same quantisation process. We applied the query vector to the neural network to find the k best-matching historical time periods, using kernel-based vector similarity and incorporating spatio-temporal aspects.
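The quantise, store and match steps can be sketched as below. This is a simplified illustration under stated assumptions and not the FREEFLOW tool. It replaces the CMM with a plain dictionary scan, scores a match by counting shared set bits, and omits the kernel-based similarity and spatio-temporal weighting. The function names and bin edges are hypothetical.

```python
def quantise(values, bin_edges):
    """Map each variable to a bin and one-hot encode the result as a bit list.

    bin_edges[i] holds the ascending bin edges for variable i. Values above
    the last edge fall into a final overflow bin.
    """
    bits = []
    for value, edges in zip(values, bin_edges):
        bin_index = sum(value > edge for edge in edges)
        one_hot = [0] * (len(edges) + 1)
        one_hot[bin_index] = 1
        bits.extend(one_hot)
    return bits


def k_best_matches(query_bits, history, k=3):
    """Return the k time-period labels whose stored vectors share most set bits.

    history maps a time-period label to its stored binary vector.
    """
    scored = [
        (sum(q & h for q, h in zip(query_bits, bits)), label)
        for label, bits in history.items()
    ]
    # Highest overlap first. Ties keep their insertion order.
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [label for _, label in scored[:k]]
```

Binary vectors are what make this kind of matching fast: comparing a query with a stored pattern reduces to counting overlapping bits, which is the operation an associative memory such as a CMM performs across all stored patterns at once.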

Finally, we provided advice to the traffic operator. We cross-referenced operator logs for traffic control interventions made during the k best-matching time periods, calculated a quality score for each of these interventions (how well it worked) and recommended to the operator the intervention likely to be most effective for the current situation. We also used the neural network to predict variable values in order to plug gaps in the data, to overcome a sensor failure, or to look ahead and anticipate congestion problems. We produced a prediction of the future traffic value by averaging the variable value across the set of matches retrieved by the neural network.
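The prediction and recommendation steps can be sketched as follows. This is a minimal illustration that assumes each matched time period is a dictionary of variable values plus an optional list of logged interventions with precomputed quality scores. That record layout, the function names, and the choice to rank interventions by mean score are assumptions made for the example.

```python
from statistics import mean


def predict_value(matches, variable):
    """Predict a variable by averaging its value across the matched periods."""
    return mean(period[variable] for period in matches)


def recommend_intervention(matches):
    """Return the logged intervention with the highest mean quality score.

    Returns None when no matched period has a logged intervention.
    """
    scores = {}
    for period in matches:
        for name, quality in period.get("interventions", []):
            scores.setdefault(name, []).append(quality)
    if not scores:
        return None
    return max(scores, key=lambda name: mean(scores[name]))
```

For example, given three matched periods with flows of 100, 140 and 120 vehicles, `predict_value` returns 120, which can stand in for a missing sensor reading or serve as a short-term forecast.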

** Image shows: Traffic at night by Petr Kratochvil

CARMEN Project

The Carmen project developed a web–based portal for users to run compute-intensive research while recording what they had done. It used a three–tier architecture and a cloud-based infrastructure.

I worked on all aspects of the portal’s three-tier architecture. The back end was built in Java and SQL to manage data storage, security and service enactment across a cloud-based infrastructure. I also collaborated on the front-end web interface, developed in JavaScript.

The CARMEN Virtual Laboratory (VL) is a cloud–based platform which allows neuroscientists to store, share, develop, execute, reproduce and publicise their work.

Carmen produced an interactive publications repository. The repository allows users to link data and software to publications. Other users can then examine the data and software associated with a publication and execute that software within the VL, using the same data as the authors used in the publication.

The cloud-based architecture and SaaS (Software as a Service) framework allow users to upload and analyse vast data sets using software services. This new interactive publications facility allows others to build on research results through reuse. It aligns with the move towards open access research by funding agencies, institutions and publishers. Open access supports reproducibility and verification of research resources and results. Publications and their associated data and software will be assured of long-term preservation and curation in the repository.

Further, analysing research data and reproducing the evaluations described in publications frequently requires a number of execution stages, many of which are iterative. The Carmen VL provides a scientific workflow environment to combine software services into a processing tree. These workflows can also be associated with publications and executed by users.
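The idea of combining services into a processing tree can be sketched as below. This is an illustrative model only and not the Carmen VL's workflow engine. It assumes each service can be represented as a plain Python callable, and the `Stage` class is hypothetical.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, List


@dataclass
class Stage:
    """One workflow stage: a service applied to the outputs of its input stages."""
    service: Callable[..., Any]
    inputs: List["Stage"] = field(default_factory=list)

    def run(self) -> Any:
        # Evaluate the input stages first, then apply this stage's service.
        return self.service(*(stage.run() for stage in self.inputs))
```

A three-stage workflow that loads data, filters it and summarises it is then `Stage(sum, [Stage(keep_large, [Stage(load)])])`, where `load` and `keep_large` are user-supplied functions. Running the root stage executes the whole tree, and storing that tree alongside a publication is what lets another user re-run the same analysis.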

The VL provides a secure environment where users can decide the access rights for each resource to ensure copyright and privacy restrictions are met.